I’ve been creating content for years. YouTube videos, blog posts, tweets, podcast appearances, internal docs for my company. Thousands of pieces scattered across platforms and folders.

Here’s the problem: I can’t remember what I’ve said.

Not in a concerning way. In a “did I already share that framework?” or “what was that thing I said about distribution vs product?” way. My past content exists, but I can’t access it when I need it. When I sit down to write something new, I’m starting from scratch instead of building on foundations I’ve already laid.

The Inspiration

I was listening to a podcast where Caleb Ralston (a personal branding creator on YouTube) mentioned that his team had built an “AI database” of all his historical content. They transcribed every video he’d ever appeared in and turned it into something searchable. It let them understand his existing talking points, find frameworks he’d already developed, and maintain consistency across content.

The concept stuck with me. What would it look like to build something similar for myself?

What I Built

A local semantic search engine that can answer questions about my own content. The entire system runs on my laptop. No cloud services, no API costs after setup, complete privacy.

The stack is surprisingly simple:

- ChromaDB for vector storage

- Ollama for local embeddings (nomic-embed-text model)

- Python script to ingest and query

- Markdown as the universal format

Total setup: maybe 200 lines of code.

How It Works

- Collect content – YouTube transcripts (downloaded via yt-dlp), blog posts, docs, anything in text form

- Chunk it – Split documents into ~500-word segments that overlap slightly with their neighbors

- Embed it – Convert each chunk to a vector using Ollama locally

- Store it – ChromaDB persists everything to disk

- Query it – Semantic search returns relevant chunks for any question
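Here is a minimal sketch of the chunking step. It assumes chunks are measured in whitespace-separated words and that neighboring chunks share 50 words. The post only says chunks overlap, so the overlap size is an assumption, and `chunk_words` is a hypothetical name, not taken from `build-kb.py`:

```python
def chunk_words(text: str, size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into chunks of up to `size` words; consecutive chunks share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    words = text.split()
    step = size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reached the end; avoid a redundant tail chunk
    return chunks


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(1200))
    for chunk in chunk_words(sample):
        words = chunk.split()
        print(len(words), words[0], words[-1])
    # 500 w0 w499
    # 500 w450 w949
    # 300 w900 w1199
```

The overlap means text near a boundary keeps some of its surrounding context in both neighboring chunks. A single hard cut would strand it in one chunk or the other.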

```bash
# Ingest all content
uv run build-kb.py --ingest

# Ask questions
uv run build-kb.py --query "What have I said about content systems?"
uv run build-kb.py --query "My thoughts on distribution vs product"
```

The “semantic” part matters. I’m not doing keyword matching. When I ask about “content systems,” it returns chunks that discuss workflows, automation, and publishing pipelines, even if those exact words aren’t used. The embedding model understands meaning, not just strings.
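Here is one way to make “semantic” concrete. Every chunk and every query becomes a vector, and search ranks chunks by how closely their vectors point in the same direction. The sketch below uses cosine similarity over tiny, made-up 3-dimensional vectors. Real nomic-embed-text vectors have hundreds of dimensions, and in this setup ChromaDB does the ranking internally with its own configurable distance metric. Treat this as an illustration of the idea, not ChromaDB’s implementation:

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity in [-1, 1]; higher means the vectors point in a more similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, chunks, k=2):
    """Return the k (text, score) pairs whose vectors are most similar to the query vector."""
    scored = [(text, cosine(query_vec, vec)) for text, vec in chunks]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Hypothetical toy vectors standing in for real embeddings.
chunks = [
    ("My publishing pipeline automates drafts to WordPress", [0.9, 0.1, 0.0]),
    ("Workflow for repurposing videos into posts", [0.8, 0.3, 0.1]),
    ("Notes on quarterly revenue goals", [0.1, 0.2, 0.9]),
]
query = [1.0, 0.2, 0.0]  # pretend embedding of "content systems"

for text, score in top_k(query, chunks):
    print(f"{score:.3f}  {text}")
```

Neither top result contains the words “content systems.” The pipeline and workflow chunks rank first only because their vectors sit near the query’s vector, while the revenue note ranks last.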

The Obsidian Connection

Here’s where it gets interesting.

My entire working directory is a folder of markdown files. Blog posts, notes, drafts, transcripts—all .md files in a structured hierarchy. That folder is also an Obsidian vault.

Obsidian gives me:

- Visual browsing – Navigate content through a nice UI

- Linking – Connect related ideas with [[wiki-style links]]

- Graph view – See how concepts cluster together

- Search – Quick full-text search when I know what I’m looking for

The knowledge base adds:

- Semantic search – Find content by meaning, not keywords

- Cross-reference discovery – “What else have I said that’s similar to this?”

- Topic clustering – Analyze patterns in what I talk about most

They complement each other. Obsidian for browsing and organizing. The knowledge base for querying and discovering.

What I Discovered

After ingesting ~400 chunks from my content, I ran an analysis to find topic clusters. The results were illuminating:

| Topic | Mentions |
|---|---|
| Claude Code / AI automation | 86 |
| Content systems & workflows | 75 |
| Marketing & business | 106 |
| Founder productivity / goals | 62 |

The phrase “claude code” appeared 38 times in my personal brand content. “Content” appeared 131 times. These are the themes I return to constantly.
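Raw phrase counts like these are the easy part of the analysis. Here is a minimal sketch of how to get them, assuming case-insensitive, whole-word matching over chunk text. The original analysis may have matched differently, and `count_phrases` is a hypothetical helper:

```python
import re
from collections import Counter


def count_phrases(chunks: list[str], phrases: list[str]) -> Counter:
    """Count case-insensitive, whole-word occurrences of each phrase across all chunks."""
    counts = Counter()
    for phrase in phrases:
        # \b keeps "content" from matching inside "contentious";
        # \s+ lets "claude  code" with extra spaces still match.
        words = map(re.escape, phrase.split())
        pattern = re.compile(r"\b" + r"\s+".join(words) + r"\b", re.IGNORECASE)
        for chunk in chunks:
            counts[phrase] += len(pattern.findall(chunk))
    return counts


chunks = [
    "Claude Code changed my content workflow.",
    "A content system beats ad hoc content every time.",
    "Using claude  code for growth engineering.",
]
print(count_phrases(chunks, ["claude code", "content"]).most_common())
# → [('content', 3), ('claude code', 2)]
```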

More useful than the raw counts were the semantic clusters. When I queried “What have I said about content systems?”, I got back chunks from:

- A blog post about growth engineering with Claude Code

- A YouTube video called “Creating a Content System”

- Internal documentation about creative direction

Content I’d forgotten I made. Ideas I’d already articulated that I can now build on instead of recreating.

The Broader Pattern

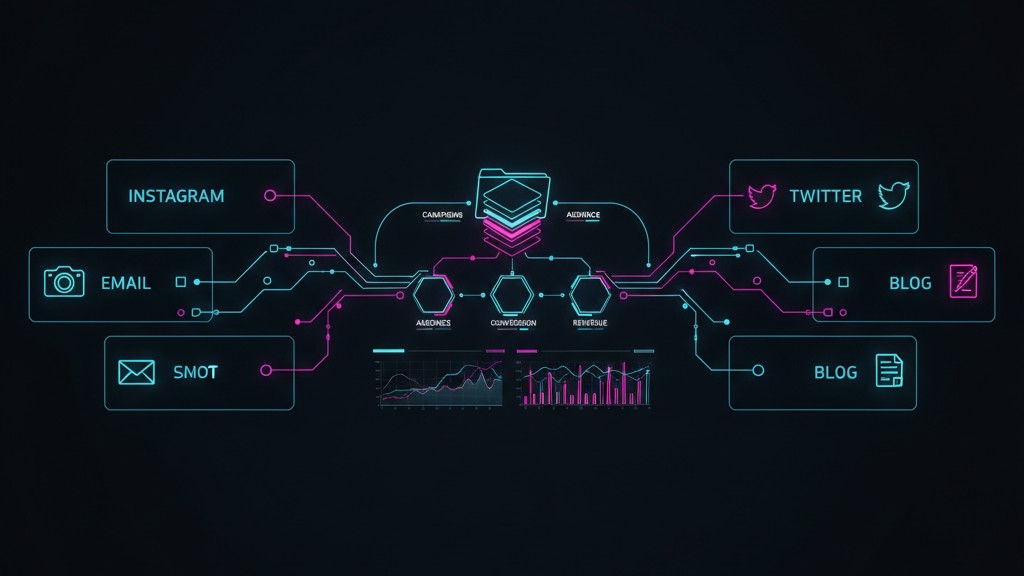

This is part of something I’ve been calling “growth engineering”—treating marketing infrastructure like software infrastructure. The knowledge base is one component.

The full system looks like this:

```text
Working Directory (Obsidian Vault)
├── posts/               # Blog content
├── content/             # Thought leadership drafts
├── knowledge-base/      # Vector DB + scripts
│   ├── youtube-transcripts/
│   ├── chroma-db/
│   └── build-kb.py
└── products/            # Product pages and docs
```

Everything is markdown. Everything is version controlled. Everything is queryable.

When I want to write something new:

- Query the knowledge base: “What have I said about [topic]?”

- Review existing content in Obsidian

- Build on what exists instead of starting fresh

- Publish through the same markdown → WordPress pipeline

The AI isn’t writing my content. It’s helping me remember and organize what I’ve already created. The knowledge base becomes institutional memory for a one-person operation.

How to Build Your Own

If you want to try this, here’s the minimal setup:

1. Install Ollama

```bash
brew install ollama
ollama serve                   # runs in the foreground; use a second terminal for the next command
ollama pull nomic-embed-text
```

2. Create the ingestion script

The core is maybe 100 lines. Collect documents, chunk them, embed them, store them in ChromaDB. The full script is in my knowledge-base repo.
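The “collect documents” step can be small. Here is a minimal sketch, assuming the inputs are `.md` files plus the `.vtt` caption files that YouTube transcripts arrive as. The helper names are hypothetical. The VTT cleanup drops the header, cue timing lines, inline tags, and the repeated lines that rolling auto-captions produce. That is one reasonable approach, not necessarily what `build-kb.py` does:

```python
import re
from pathlib import Path

TAG = re.compile(r"<[^>]+>")  # inline timing/styling tags such as <00:00:01.200><c>


def vtt_to_text(vtt: str) -> str:
    """Flatten a WebVTT caption file into plain text."""
    lines, previous = [], None
    # The header block (WEBVTT, Kind:, Language:) ends at the first blank line.
    _, _, body = vtt.partition("\n\n")
    for raw in body.splitlines():
        line = TAG.sub("", raw).strip()
        if not line or "-->" in line:
            continue  # blank separators and cue timing lines
        if line != previous:  # auto-captions repeat the previous line in each new cue
            lines.append(line)
            previous = line
    return " ".join(lines)


def collect_documents(root: str) -> dict[str, str]:
    """Recursively gather .md files and .vtt transcripts under root, keyed by relative path."""
    base = Path(root)
    docs = {}
    for path in sorted(base.rglob("*")):
        if not path.is_file():
            continue
        key = str(path.relative_to(base))
        if path.suffix == ".md":
            docs[key] = path.read_text(encoding="utf-8")
        elif path.suffix == ".vtt":
            docs[key] = vtt_to_text(path.read_text(encoding="utf-8"))
    return docs
```

Each value in the returned dict is plain text ready for chunking. Keeping the relative path as the key gives every chunk a source file to point back to.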

3. Point it at your content

YouTube transcripts are easy:

```bash
yt-dlp --write-auto-sub --sub-lang en --skip-download \
  "https://www.youtube.com/@your-channel"
```

Markdown files just need to be in a folder. The script recursively finds them.

4. Query away

```bash
uv run build-kb.py --query "your question here" -n 10
```

The embedding model runs locally. No API keys are needed, and once the model is pulled, embedding works offline. Completely private: your content never leaves your machine.
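Under the hood, “runs locally” means the script talks to the Ollama server on your own machine over HTTP. Here is a minimal standard-library sketch. It assumes Ollama’s default address (`http://localhost:11434`) and a version recent enough to expose the `/api/embed` endpoint. `build_embed_request` and `embed` are hypothetical names, not taken from `build-kb.py`:

```python
import json
import urllib.request

# Assumes Ollama's default port and a version that provides /api/embed.
OLLAMA_URL = "http://localhost:11434/api/embed"


def build_embed_request(texts: list[str], model: str = "nomic-embed-text") -> urllib.request.Request:
    """Build (but don't send) a POST request asking Ollama to embed a batch of texts."""
    body = json.dumps({"model": model, "input": texts}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def embed(texts: list[str]) -> list[list[float]]:
    """Send the request to the local Ollama server and return one vector per input text."""
    with urllib.request.urlopen(build_embed_request(texts)) as resp:
        return json.loads(resp.read())["embeddings"]
```

With `ollama serve` running, `embed(chunk_texts)` returns one vector per chunk, and those vectors are what get stored in ChromaDB alongside each chunk. Building the request separately from sending it makes the payload easy to inspect without a running server.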

The Meta Layer

There’s something recursive about using AI to build the system that helps me leverage AI.

Claude Code helped me write the ingestion script. It helped me debug the VTT parsing for YouTube transcripts. It helped me analyze the topic clusters. Now the knowledge base feeds context back into Claude Code when I’m working on new content.

The tools build the tools that improve the tools.

That’s the pattern I keep returning to. Not “AI writes my content” but “AI amplifies my ability to create and connect my own content.” The knowledge base doesn’t have opinions. It has receipts—everything I’ve said, searchable by meaning.

For someone building a personal brand, that’s the foundation. Know what you’ve said. Build on it. Be consistent AND repetitive, but in unique ways. Let the system remember so you can focus on what’s new.